Effective debate: in defence of John Hattie

It seems slightly incongruous to be writing a defence of John Hattie. Given that he is one of the most successful researchers in education today, you might reasonably assume that his voice requires no amplification. The winner of numerous awards, Hattie has held a number of professorships, leads a new, multi-million-dollar Science of Learning Research Centre at the University of Melbourne, is an ACEL and APA fellow, has authored over 850 papers and a number of books, including Visible Learning (2008), and was appointed last year as the new Chair of AITSL. Despite all of this, Hattie’s work remains somewhat divisive.

This piece was prompted by my latest encounter with a statistical critique of Hattie's work that has regularly resurfaced online over the past four years. The critique originated in a 2011 article by Arne Kåre Topphol, 'Can we trust the use of statistics in educational research?' (Norwegian). Its claims reached the English-speaking internet through several English-language posts from Norway, including Eivind Solfjell's 'Did Hattie get his statistics wrong?' (which includes responses from both Hattie and Topphol). The issues raised were also addressed by others, for example in 'Can we trust educational research?' and Snook et al. (2009) Invisible Learnings?.

Topphol's original article claimed that Hattie had miscalculated one of the statistical measures used in Visible Learning, the Common Language Effect size (CLE). This assertion has been both independently verified and acknowledged by Hattie. For his part, in subsequent remarks, Topphol agreed with Hattie that the CLE was not the main statistical measure relied upon in Hattie's work. Importantly, the miscalculated CLE (a measure of probability) is not the source of Hattie's much-quoted effect sizes.
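For readers unfamiliar with the distinction, here is a minimal sketch of how a CLE is conventionally derived from a standardised effect size (Cohen's d). It assumes two normally distributed groups with equal variance, and it is an illustration of the standard textbook conversion, not a reconstruction of the calculation in Visible Learning. The function name `cle_from_d` and the example values of d are my own, chosen purely for illustration.

```python
from math import erf

def cle_from_d(d: float) -> float:
    """Common Language Effect size for a given Cohen's d.

    Assumes two normal distributions with equal variance. The CLE is the
    probability that a score drawn at random from one group exceeds a score
    drawn at random from the other: Phi(d / sqrt(2)), where Phi is the
    standard normal CDF. Since Phi(x) = 0.5 * (1 + erf(x / sqrt(2))),
    this simplifies to 0.5 * (1 + erf(d / 2)).
    """
    return 0.5 * (1.0 + erf(d / 2.0))

# Illustrative effect sizes only (not taken from any table in the book).
for d in (0.0, 0.2, 0.4, 0.8):
    print(f"d = {d:.1f}  ->  CLE = {cle_from_d(d):.3f}")
```

The point of the sketch is simply that the CLE is a restatement of the effect size as a probability. It is computed from d, not the other way round, so an error in the CLE column leaves the underlying effect sizes untouched. Because it is a probability, a correctly computed CLE always falls between 0 and 1.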

Two years later, these claims were given a new lease of life in a post titled, 'John Hattie admits that half of the Statistics in Visible Learning are Wrong'. Although all these articles have been widely shared over a number of years, the fragmented nature of social networks means that it is often assumed, by those coming across them for the first time, that the information they contain is either new or is being ignored for some (possibly nefarious) reason. Recently, Dan Haesler wrote about these allegations, which led to an interview and a series of posts in which Hattie categorically denied ever saying that half the statistics in Visible Learning were wrong and argued that the contested CLE could be excised from his book without altering the conclusions he drew "one iota."

From my perspective, this narrow focus on Hattie's statistics, whether by advocates or critics, is misleadingly reductive. Although effect sizes are unquestionably important to his thesis, the resulting work is poorly represented by a few tables.

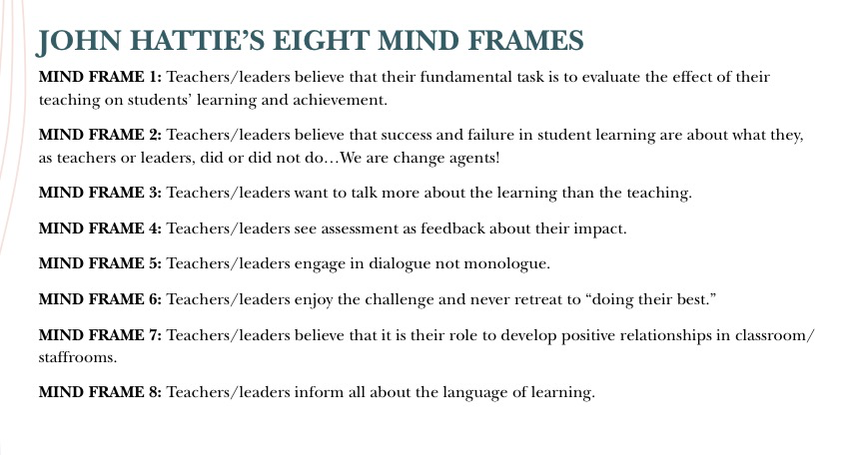

The major argument in this book underlying powerful impacts in our schools relates to how we think! It is a set of mind frames that underpin our every action and decision in a school; it is a belief that we are evaluators, change agents, adaptive learning experts, seekers of feedback about our impact, engaged in dialogue and challenge, and developers of trust with all, and that we see opportunity in error, and are keen to spread the message about the power, fun, and impact that we have on learning — Visible Learning for Teachers (2012), p.159

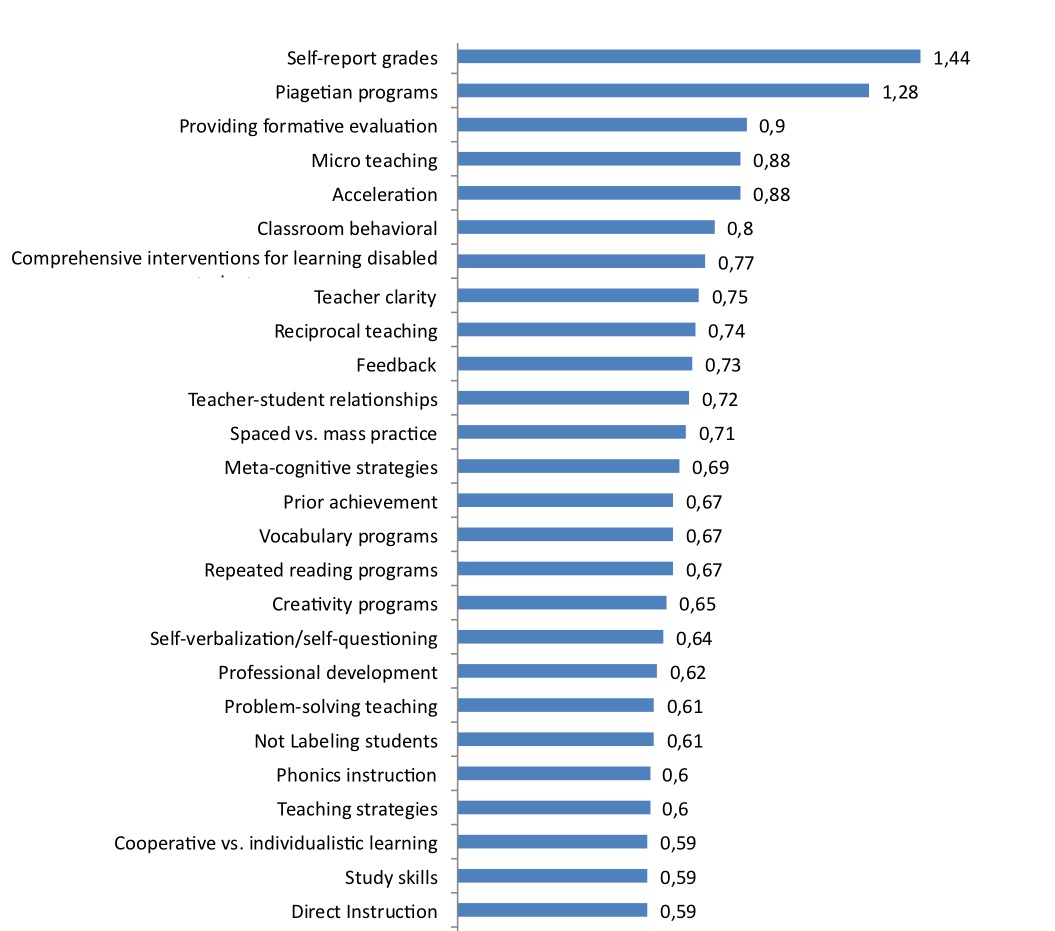

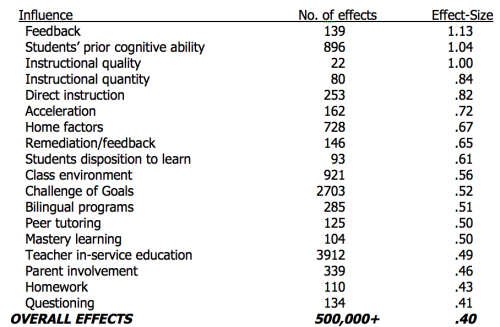

That Hattie sees the crux of his work in these terms may surprise those who have mostly been exposed to his work like this:

Or this:

Some of the information conveyed here is very valuable (for example, the potential power of feedback), some requires elucidation ('direct instruction' does not mean what you think it means) and some requires further analysis (class size!). Regrettably, many cannot get past specific issues, such as the results on class size and the way this research has been leapt upon by those who see increased class sizes as a driver of educational efficiency. This is a shame, as getting caught up in these details means missing the larger vision described by Hattie's primary injunction (2012, p.vii and then again on 5, 9, 32, 84, 157 & 169):

Know thy impact.

Hattie does not view his meta-analyses and statistical tables as ends in themselves, as illustrated by this quote from 'In Conversation' (PDF, 2013):

I used to think that the success of students is about who teaches where and how and that it’s about what teachers know and do. And of course those things are important. But then it occurred to me that there are teachers who may all use the same methods but who vary dramatically in their impact on student learning.

This observation led him to identify eight mind frames that he says characterise effective teachers:

I think that many would be surprised to learn that it is these mind frames that constitute the core of Visible Learning for Teachers (2012). Although derived from some of the most academic of research (meta-analyses of meta-analyses), these mind frames suggest an approach to teaching that is flexible and highly context-driven. It rests on adopting a more conscious approach to our work as teachers and on a more deliberate, and diligent, effort to gather information about the impact we have on learning. It is perhaps for this reason that I find the controversy over Hattie's work so vexing.

A teacher's scarcest resource is time. It can be difficult, given the constraints, for teachers to engage deeply with research. Subtleties or nuances of arguments laid out across hundreds of pages are often reduced to sound bites and infographics. My concern is that, in imputing motives and narrowing the focus to a single artifact, we risk missing out on the value that may otherwise be gleaned and, instead, end up wasting more of our precious time.